The uncanny world

Jamais vu: or how to go beyond the pale.

“Maybe it’s just the propaganda from the newsmachines, but in the last month I’ve gotten weary of all this. Everything seems so grim and serious, no colour to life.”

“Do you think the war is in vain?” the older man said suddenly. “You are an integral part of it, yourself.”

Philip K. Dick. (1955). A Handful of Darkness. “Expendable”.

A 68-year-old woman found herself scammed out of her life savings after it turned out that she wasn’t actually texting with NCIS star Mark Harmon, and that his requests to borrow a couple of hundred thousand dollars to pay off his crew in Italy were less than genuine.

In another case, a Reddit user reports that his mom is out tens of thousands of dollars, also to help Mark Harmon — to put out wildfires, to get out of the Philippines, and to divorce his wife.

It’s easy to scoff at such ostensible stupidity. Too easy. The massive increase in online fraud of this kind is not simply because people are getting dumber. The reason is rather that an intense faux-relationship often just seems much more real than anything else available, especially to a post-covid precariat, starved for human connection and validation. When the entire world is just fake and disconnected, little will be more attractive than the mere prospect of a genuine reciprocal relationship with someone we’ve already formed a significant parasocial attachment towards.

Enter then the downward spiral of our collective attachment to agential AI. The socio-technical phenomenon is designed to reproduce the very conditions for its own entrenchment, further impoverishing our relations, undermining human connection, degrading the content of culture, and, finally, eroding epistemic trust itself.

And here we are, with everything slowly becoming uncanny. Becoming doubtful. Not-quite-right.

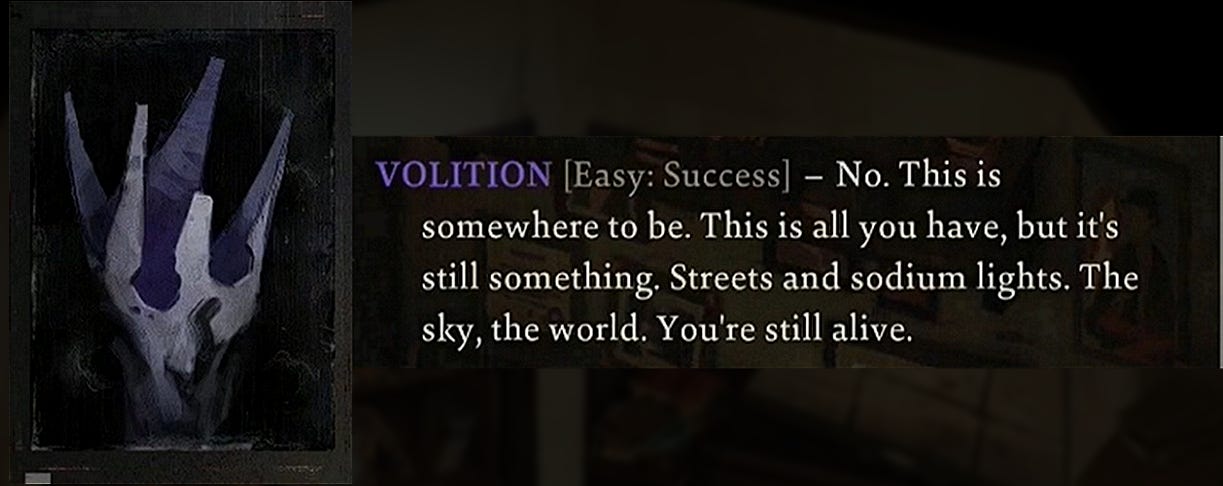

In the narrative background of the 2019 video game Disco Elysium, the world is permeated by a sort of chaos substance, whose gradual and relentless spread implies some final and ineffable entropic doom in the not-too-distant future. It’s called “the pale”.

I don’t know when Kurvitz first wrote about this stuff, exactly, but as a story device, it obviously reflects the creeping apocalyptic sentiment of a modernity under an end-stage capitalism that pushes against ecological and human limits alike. It’s about the unstated meta-narrative behind climate change, millenarianism, peak oil, the singularity, and post-apocalyptic zombie fiction all at once, and the notion in the story really manages to penetrate to some depth into the existential psychology underlying these modern preoccupations.

But lately, I’ve found that the pale of Disco Elysium, as a metaphor, is also eerily reminiscent of our current situation of epistemic decoherence. Of the radical and relentless decay of meaning in what now, for lack of a better word, passes for “public discourse”.

So people are now, on a broader scale, literally outsourcing their own thinking to generative AI. To the mass media spectacle. I’m sure most of you have seen your fair share of slop blog posts and reddit threads, not to mention the ubiquitous garbage youtube channels, all courtesy of some clickbait farm in the concrete jungles of Stockholm or Saigon. This would be bad enough on its own, but now we’re increasingly seeing regular run-of-the-mill, salt-of-the-earth folks substituting LLM output for their own thinking.

I first observed it in various public forums, where people suddenly started trying to pass off AI slop as their own “research”. And the peculiar thing was that this stuff wasn’t sheepishly foisted upon the other participants as a perhaps helpful AI summary, but posted as if these were the very own words of whoever started the thread.

More often than not, these people also had a strangely self-assured attitude, seemingly almost convinced of their own brilliance through simply copypasting an incoherent summary with a smattering of two-dollar terminology they barely understood.

And on some level I get that students are tempted to use this stuff to cut corners and fake their way through time-consuming assignments, entirely aware that they’re cheating just as much as if they’d literally paid some kid in Taiwan to write their essay for them. It’s when people voluntarily participate in public conversations through outsourcing their own thinking to an LLM, and without anything to gain from it whatsoever, that I feel we’re entering the Twilight Zone.

When confronted, these people tend to give me two types of responses. Many will own up to it, and simply argue that “the AI” helps them flesh out and communicate their ideas, that they provide the outline while the LLM just fills in the gaps, facilitating and streamlining their thinking. This is patently stupid, of course, but it’s at least honest and forthright on some basic level.

Others are more slippery, and immediately challenge the assertion that they’ve used a slop generator to falsify their own output, and here’s where things get tricky. A common retort is based around the suggestion that no human being could ever reliably identify AI slop, and that anyone who suggests otherwise is simply jealous of their incredible abilities of exposition and vast knowledge.

And the issue is precisely that this doesn’t come off as obviously inane. That it seems genuinely difficult for increasing numbers of people to immediately pick out what’s AI-generated in the midst of actual human output. And since anyone with even just a couple of brain cells to rub together who’s ever read a novel in their lives will under normal circumstances, entirely without effort, be able to recognize raw AI text slop if it’s sizeable enough, we should be very worried if people increasingly are struggling in this respect.

But the really remarkable, car-crash fascinating phenomenon in the midst of all this is when people seem to genuinely believe that the AI slop is somehow their own creative output.

It’s sometimes expressed through people thinking they’ve “trained” the LLM to meaningfully speak in their own voice so that the prompts now function as a kind of streamlined shorthand for their own thought and reflection. Others maintain that they’ve somehow partnered with the LLM towards unlocking their own unique creative genius to then go off on adventures of solipsistic delusions of grandeur, with the sycophantic AI reframing trite summaries of Wikipedia as the user’s uniquely brilliant discoveries of historical patterns or natural laws.

And something which feeds into this in a way that I think is underestimated is the proliferation of shitty freeware AI detectors. A squid ink barrage of popular detection tools that give you lots of false positives will both tend to reinforce the idea that it’s increasingly becoming impossible to actually discern AI slop from genuine human output, and also serve to support the delusions of individuals who in various ways consider an LLM to function as a genuine extension of their cognitive agency rather than something which effectively suppresses it.

It’s this infiltration of the digital simulacrum in not only the media content we’re mainlining, but as a substitute for our own creative output, for our own actual cognitive processes, that I consider to exemplify this new and unprecedented penetration of the uncanny. It’s not just that we encounter deepfakes and AI slop in the media flow, but that actual human individuals that we interact with now have integrated the hyperreal machinery in their very own communicative output.

This unholy fusion is aptly exemplified in a recent post by a rather well-known former Swedish journalist who has fallen victim to the LLM cult. He’s adopted this posture that genAI may well have a negative impact, but if you only use it in the right way, which only clever, high-IQ people like himself are capable of, it’s a miraculous tool for building a prosperous and equitable future. So the post in question is pretty funny in that he’s promoting and using AI to summarize and lament the fact that WW2 deepfakes now massively outnumber originals, which basically means that we’re approaching a situation where all of history can be falsified or memoryholed with a couple of clicks. The irony seems to be lost on this former journalist, however.

But I guess we see this all the more clearly in the reports around the recent events in Australia.

Apart from the perversity of everyone virtue-signalling over the Sydney incident (everyone tearing out their hair, laws being rewritten, massive outpourings of indignation and sympathy &c, all the channels, full coverage, while there’s nary a breath about the mass slaughter of kids in South Sudan just over a week ago), clear and convincing images representing the victims as crisis actors and painting the event as a hoax went viral even during the very first wave of mainstream media reporting.

And there’s no way, not even in principle, to immediately verify or falsify either of the narratives.

This is the perfect recipe for maximizing cognitive dissonance. For seeding a general, pervasive distrust of everything. Which, of course, has the vast majority defaulting to authority as basic epistemic warrant, in line with the behavioural programming that was established during the covid event.

And right here is the system’s solution to the pervasive problem of hallucinations in large language models.

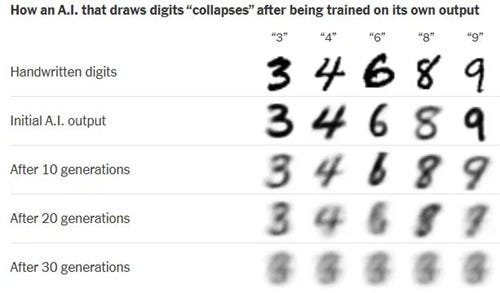

The flaw in all of the predictions of pervasive hallucinations or of local or global model collapse is that they overlook the potential stabilizing impact of the human factor. This is hardly surprising, since a premise of the very problem is a recursive feedback loop of AI-generated output — and a common foundation of this premise is the incontrovertible observation (mine included) that genuine human output doesn’t have a snowball’s chance in hell of keeping up with the slop machine.

But this unholy cognitive hybrid of human-guided genAI slop, entrenched through an exacerbated need for relational attachment, actually offers a possible solution. If the massive proliferation of AI output were to be strategically curated by humans at crucial junctures, an indefinite recalibration is conceivable.

In other words, you and I could be harnessed to work as the meta-prompt that keeps the machine operational.

Not necessarily functional in the sense of producing useful knowledge with regard to the actual needs of human societies, but stable enough to avoid the kind of radical incoherence that would preclude the system from fulfilling its core purposes of propaganda and consent manufacturing.

The key purpose of agential AI may well just be to shackle us to the machine and have us dream up the walls of our own prison, reproducing the conditions of our own enslavement — the endless reproduction of a hyperreal condition just alien enough to keep us in a state of permanent cognitive disarray, but sufficiently coherent and authoritative to maintain our loyal attachment.

Drowning in the pale of our own creation.

Name after name and none of them is familiar. They seem real, but something is wrong. You feel like a kid looking at stickers on the fridge: Truvant, The Apricot Company, World Games ’34. You can almost see your hand reaching out for them. Scratch at the corners, see if they peel loose... This feels like the *most* important of all the thoughts; the one you truly must complete.

Jamais vu. The opposite of déjà vu. Not *already* seen, but *never* seen. Everything that should be familiar appears strange and new. Like some half-forgotten day in your childhood, only *now*. That’s the feeling you’ve been having. And for who knows how long? You should go and ask Joyce Messier about this -- what world are we in?

This is the fundamental question.

Disco Elysium.

My idea of today is typewriters, limited editions and local printers, bookshops, coffeehouses and libraries. Borrowing a leaf from the vinyl renaissance.

In terms of your other point, I completely agree and unfortunately the "smarter" an individual believes they are, the harder it is for them to accept they've been scammed. We see this every day in terms of anyone grocery shopping or consuming MSM who isn't aware of the behind the scenes manipulation. Try pointing out the basics and people look at you like a Martian speaking another language. A few years ago I read somewhere that intelligent people are also ironically easier to manipulate. But smart is where the party is, as intelligence at some point comes back to bite one in the ass as many intelligent people discover humility and have doubts, while smarty-pants have the ego syndrome of mediocrity overestimating itself.

Also, another thing I've noticed is that here in the West we completely missed the concept of the ego we find in the East. Some of it was remedied by Freud (id) and Jung (Shadow), but few do the inner work as it's alien to our philosophies of daily living (and Freud being discredited doesn't help with the other interesting ideas). It's a shame we don't have a common thread of ideas we all pass through which incorporates ideas on ego, self, interior dialogue, etc. I think these would help people of mediocre intelligence be more grounded.

Man, that Maria Elena Milagro photo is so Tell-Tale Heart come to life. if i wasn't decoupling from the internet so fast i'd use her face as my avatar. it MEANS something and i can't figure it out. i've been fascinated since i first read this the other day.