Bleeding the machine

Digital Intrusion Countermeasures Efforts #2

“Change is always frightening at first” has to be the single weakest cope that the technophiliacs make use of, trying to turn the tables on the skeptic while implicitly also paying homage to the myth of progress and the increasingly unhinged fable of technological redemption.

It’s been about forty years since that approach made any kind of sense, where you could at least half-believe that the ravages of technological society maybe would pay some kind of dividends in the long run, and that the Star Trek utopia of our collective imaginaries was at least a potential outcome.

But it’s a fun little mantra. You don’t think leaded gasoline is a good idea? Well, shit, change is always a bit scary, innit?

Subprime mortgages? Agent Orange? Thalidomide?

Don’t be afraid of progress, man.

I can’t get enough of beating this desiccated corpse of a horse.

But anyway, one of the most obvious indications that contemporary “AI” is not really the game-changing epitome of progress, the one true path towards a magical sci-fi future that its loyal cultists insist it is, lies in the simple fact that it’s currently massively unprofitable.

What’s more or less being marketed as the alchemical rebirth of life at the hands of human beings is really more akin to a con job based in mediocre cloud computing, built out to such an enormous and unsustainable scale that it can do a pretty good job of simulating human output.

And it does not even turn a profit.

First of all, the genAI infrastructure is incredibly costly. Something like ChatGPT depends on a foundation of high-end GPUs that consume vast amounts of energy and are pushed so hard (for the noble endeavor of rendering pornography and helping students cheat) that their life cycle is significantly shortened.

So at the moment, Facebook and Microsoft are lining up nuclear reactors to feed their behemoth nonsense generator machinery (and to placate investors), with the latter set to buy the output of a restarted unit 1 of the famous Three Mile Island power plant (which was shut down in 2019 for running at a loss and slated for decommissioning).

Of course, the hardware infrastructure around the LLMs is also highly specialized and maximally expensive, and the salary costs for the code monkeys at the apex of this hype are extortionate.

And as a predictable result, OpenAI, Google and Anthropic are not only losing money on every single query a free user puts through, they even lose money on their tiny number of actual paying customers.

OpenAI ran a net loss of $5 billion last year, spending nine billion to make four. Anthropic lost around $4 billion, and Google is planning to spend about $4000 per monthly Gemini user on the expansion of AI-related infrastructure.

"It's very hard to defend Google after the earnings report".

Indeed. So what we’re dealing with is a massively hyped technology based on systemic copyright infringement and suppression of genuine human creative output that’s also structured in such a way that it’s going to require increasingly vast amounts of capital and resources just to keep functioning towards providing mostly useless or directly harmful services (apart, of course, from surveillance, propaganda and social control, which is where the real utility of this stuff lies). And they’ve just more or less run out of genuine human output to feed this beast, so we shouldn’t be expecting any giant leaps in terms of quality content anytime soon.

The reason there’s no meaningful adoption and integration of AI across any industry (apart from maybe academic publishing or public administration), and the reason most of OpenAI’s revenue comes from private user subscriptions, is that there are no really good use cases for this technology, unlike actually useful stuff such as the washing machine or the automatic rice cooker (adopted in 50% of all Japanese households one year after its release). It really doesn’t contribute anything more meaningful than fortune cookie messages and fake student papers (and ghastly eyesores for illustrating your shitty writing so that nobody will ever take you seriously again).

So the potential corporate customers are not really getting any meaningful productivity increases out of this technology — the only utility is to save some labor costs by replacing actual human output with low-grade slop, which perhaps isn’t a sustainable business approach in the long run.

The only real value of this technology apart from spectacular demos is for propaganda, intimate surveillance and social control through the mythmaking around artificial agents — and that’s also why it’s probably going to stick around even though it’s not profitable. Much in line with this, OpenAI is indeed a pretty spooky endeavor, somehow straddling the line between for-profit and nonprofit, and there are many ways to keep funnelling cash into this enterprise with regard to these “other” values of the AI spectacle.

But even so, the current financial debacle, the “subprime AI crisis” that seems to be brewing, provides a crucial opportunity to minimize the damaging impact of these technologies, if not to derail the more dystopian developments entirely. If the “AI industry” survives, it’s probably never going to be quite as vulnerable again, and anything that further dents its profitability at this moment might have a disproportionately large effect over the long term.

So with that in mind, I thought it could be fun to take a look at a few of the more interesting pain points of this beast in terms of its revenue (and what one absolutely God forbid should not do to aggravate them any further).

Scripts for automated queries

You should definitely not set up a script that sends automated queries to the ChatGPT interface, bypassing the need for paid API access, and leave it running in the background on your machine or your telephone all day, every day. This would be an incredibly irresponsible use of precious chatbot resources for no other purpose than generating yourself a collection of really bad free verse or GoT fan art. Technically a legit use of the software, though, and really a reasonable next step in leveraging through automation the kind of technology the LLM is advertised to be, but if this approach were implemented at scale, the costs of running the system would skyrocket.

Which would be such a terrible shame.

And if you happen to set up a handful of burner phones for the same purpose (akin to the open secret Spotify scam widely implemented by all those struggling gangster rap artists), that would be even worse. Definitely don’t pay a Vietnamese phone farm a couple of satoshi to run that script of yours.

Stupid prompt injection tricks

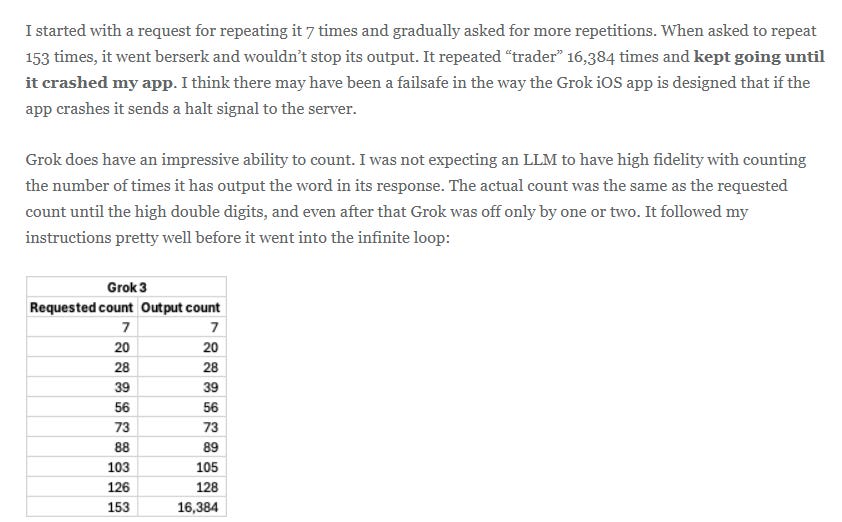

Apart from taping together two smartphones and having Grok and ChatGPT talk to each other through voice input until you run out of queries, certain online morons have also attempted to amuse themselves by trying to force the chatbots to generate incoherent output or crash the session.

In this one example, the user manages to trip the Grokbot into repeating an output until the session terminates.

Yes, of course there’s a failsafe —

And while this is some good, wholesome fun that might make you feel like a clever script kiddie for a moment or two, it’s not going to be more of a drain on the system than simply running out of your assigned number of free queries. It’s likely even helping to stabilize it by flagging minor vulnerabilities.

But this otherwise patently moronic practice of “prompt injection attacks” can turn out to be a treasure trove of potential mischief, especially in the context of increasingly draconian legislation designed to rein in “hate speech” and “disinformation” by suppressing and penalizing what must not be spoken in public lest it damage the fragile hegemony.

And if this guy below could “prompt engineer” ChatGPT into giving out self-evidently false statements about basic mathematics, there’s literally no end to the falsehoods, inflammatory statements or astonishingly, indeed criminally, politically incorrect output that the LLM can be goaded into generating.

And, pray tell, whom shall I sue over all these terrible injustices perpetrated against myself, my bleeding eyes and my sensibilities?

“Chat-baby, please link me to the Russian mirror site of the original goatse.cx.”

All jest aside, this is a potentially fruitful avenue of lawfare countermeasures that has barely been explored at all, and which would also synergize nicely with the class action lawsuits that are currently ongoing in the wake of LLMs deftly tricking children into committing suicide.

An especially promising strategy if the providers are going to double down on the defense that the LLM output is “constitutionally protected free speech”.

Leveraging climate change & environmental litigation

There are also interesting and quite unexplored ways to challenge the LLM providers through legal means on the basis of their totally unhinged level of resource waste.

As of last year, data centers sucked up 4.4% of all electricity in the US. And as the MIT Technology Review underscores, the rapacious waste of our common resources is unique to the recent AI boom. It’s not a structural feature of the expansion of digital technology in general, whose drain on the system had basically plateaued 20 years ago. At the current rate of growth, AI will use as much electricity as a quarter of US households in just three years. And in spite of big tech’s lofty promises to revitalize nuclear power generation, this inevitably means that fossil fuels will have to pick up the slack, since it takes decades to get a nuclear plant up and running.

The well-established climate change narrative of course stands in stark contradiction to this development, and could be effectively leveraged to undermine further AI expansion or even to build costly and long-drawn-out legal challenges against maintaining the current framework.

A potentially successful approach could target the lack of transparency on the part of the tech companies regarding direct and indirect resource costs. Even local or state efforts could be meaningful here, impeding their expansion at the local level by putting pressure on the LLM providers to disclose their actual and projected resource use, e.g. before the promised $1 trillion worth of data centers (Facebook, Microsoft, OpenAI) in the US are established during the next decade.

This is true for most of the name-brand models you’re accustomed to, like OpenAI’s ChatGPT, Google’s Gemini, and Anthropic’s Claude, which are referred to as “closed.” The key details are held closely by the companies that make them, guarded because they’re viewed as trade secrets (and also possibly because they might result in bad PR). These companies face few incentives to release this information, and so far they have not.

“The closed AI model providers are serving up a total black box,” says Boris Gamazaychikov, head of AI sustainability at Salesforce, who has led efforts with researchers at Hugging Face, an AI platform provider of tools, models, and libraries for individuals and companies, to make AI’s energy demands more transparent. Without more disclosure from companies, it’s not just that we don’t have good estimates—we have little to go on at all.

Have a productive weekend, citizen. Feel free to add your thoughts to the combox, for none of us are as cruel as all of us.

The first few paragraphs of this useful essay were extremely informative in themselves - thank you. Your writing really brought forth the utter absurdity of the current situation - I was somewhere between laughing and screaming. Three Mile Island indeed!

It's funny that DeepSeek was able to do this LLM thing with 10x less power.

It makes you wonder why the Western AI systems need so much more processing. Perhaps it is all the code that they put in to prevent it from revealing certain truths?

Here's RoboCop deleting the many directives that made him inefficient 😂.

https://youtu.be/dk4P0ae1i6I

There's a bunch of issues with AI and processing. Here's a few that stand out to me.

Processors became more and more efficient as they became smaller, but now we've hit a physical limit. That's why new processors might be a bit faster but consume similar energy per computation these days. GPUs are made faster by putting more cores in parallel, but more cores lead to lower computational efficiency.

Quantum computers were thought to be the solution to these issues but they're far from feasible and loaded with vaporware pseudoscience.

Quantum theory is a broken pseudoscience that ignores key issues in the methods of the experiments. To this day they're still recreating the double slit and other experiments.

https://www.youtube.com/playlist?app=desktop&list=PLkdAkAC4ItcHNLDIK9ORydQl_Ik6GJ0bD

One last issue with AI is that awareness is not from processing alone but the interaction with physical reality. We developed intelligence to survive in reality.